Chris Schreiber

Most campus leaders I talk to are treating AI copyright compliance as something to worry about after the legal dust settles.The problem is that the "dust" is wet cement, and it's hardening around us...

Most campus leaders I talk to are treating AI copyright compliance as something to worry about after the legal dust settles.

The problem is that the "dust" is wet cement, and it's hardening around us right now.

I ran university residential networks in the early 2000s. We treated Napster and Limewire as simple bandwidth nuisances. Then the RIAA weaponized the DMCA, and we were managing hundreds of takedown notices every week instead of network performance. We became de facto copyright police, burning massive staff time processing legal demands because we hadn't built the policy guardrails early enough.

We're watching the same pattern repeat with AI, but the financial stakes are higher.

In November, the Munich Regional Court ruled ChatGPT infringes copyright when it reproduces song lyrics. Model “memorization” was found to be an infringement by the court. The shortest infringed work was just 15 words.

The court rejected OpenAI's text-and-data-mining defense. They drew a critical distinction: the exception applies to where analysis terminates and the shaping of parameters begins. OpenAI was found guilty of negligence at minimum, and the court denied them a six-month grace period because they should have understood the risk.

This establishes a "should have known better" standard that applies to institutions using AI tools.

The $1.5 billion Anthropic settlement in September shifts the risk model for campus budget officers. The precedent is clear: AI copyright liability isn't theoretical. It's an expensive reality.

You can't wait for total clarity because three external factors will hit your campus whether you're ready or not.

The Grant Compliance Reality

NIH will not consider applications that are developed by AI to be original ideas of applicants. If AI detection happens post-award, NIH may refer the matter to the Office of Research Integrity for research misconduct while taking enforcement actions including disallowing costs, withholding future awards, suspending the grant, and possible termination.

The trigger? NIH identified a principal investigator who submitted over 40 distinct applications in a single submission round, most appearing to be partially or entirely AI generated. This led to a new policy limiting Principal Investigators to six new, renewal, resubmission, or revision applications per calendar year.

NIH also prohibits reviewers from using AI to analyze grants. The confidentiality risk is severe: AI tools have no guarantee of where data are being sent, saved, viewed, or used in the future.

When your Vice President for Research realizes the grant pipeline is at risk because there's no governance framework, this becomes a Board-level priority.

The Cyber Insurance Renewal

When your cyber insurance renewal comes up, underwriters will ask: Do you have an inventory of AI tools? Do you have a governance policy? Do you control the data flow?

If your answer is "we're waiting for the dust to settle," they might not just raise your premiums. They might decline to cover you. Without coverage, the institution is flying blind.

The Shadow IT Reality

The risk is already on your network. It looks like a well-meaning administrator feeding financial aid data into a public chatbot, or a researcher accidentally leaking non-public intellectual property. You can't block that at the firewall.

The only way to mitigate it is to build a safe path so they have an alternative.

You should answer one fundamental question before you can build any strategy: As an institution, are we comfortable with institutional data, intellectual property, and other information being processed or stored in AI-enabled infrastructure we do not control, and over which we have minimal visibility into how that service will then further use and disseminate our data?

If the answer is no, then those free ChatGPT accounts and random department subscriptions aren't just shadow IT. They are active policy violations. You can't build a compliance strategy until you define this baseline expectation.

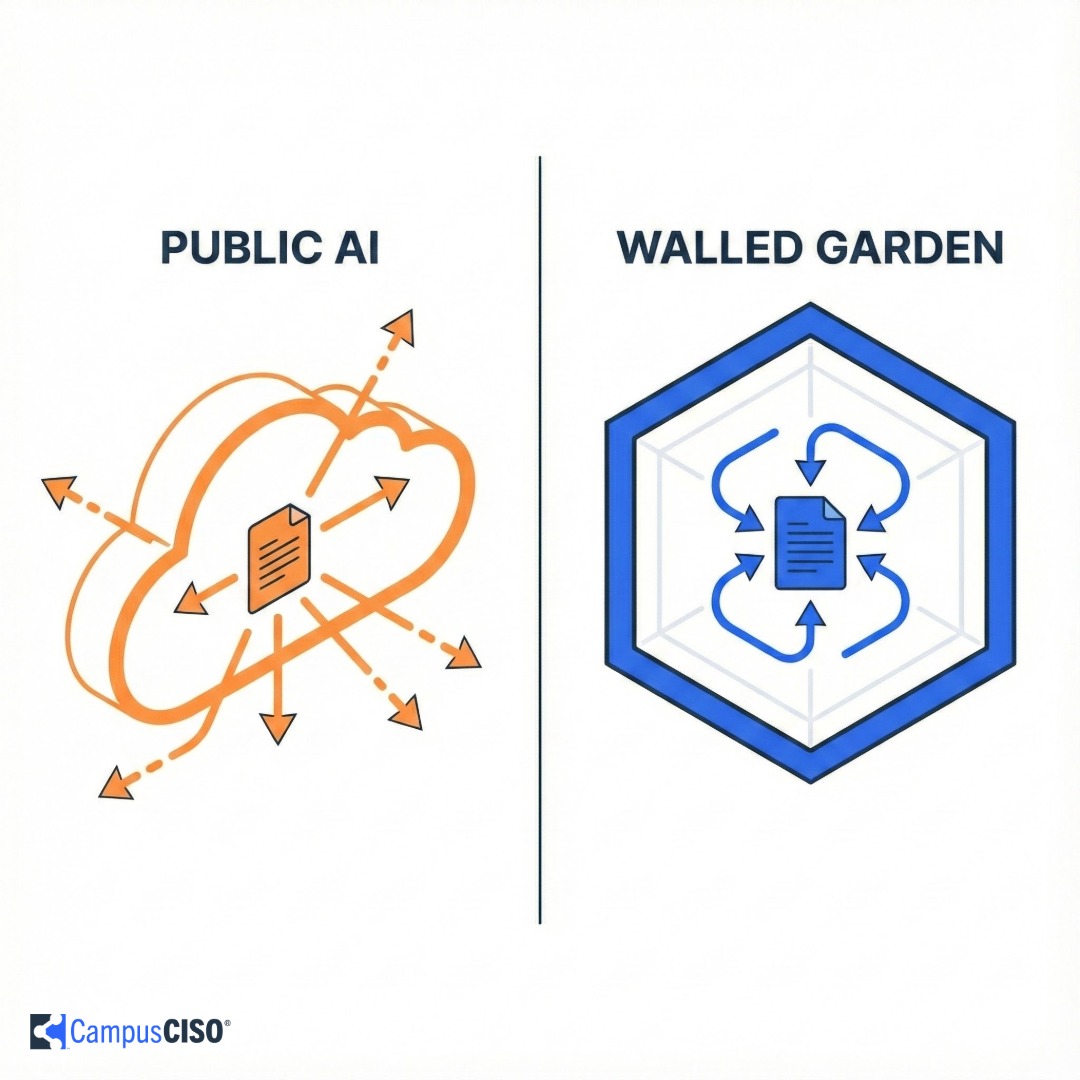

Once you have that answer, you have two architectural choices. You can build a walled garden by purchasing an enterprise license that contractually protects your data. Or, if you can't afford that structure, you lean on data governance. You train your high-risk users that if data is public, they can use open tools, but if it involves PII or proprietary research, they hit a hard wall. The policy isn't about banning the tool. It's about classifying the data that flows into it.

If you ask a faculty member to parse a thirty-page data privacy manual, you have already failed. Complexity is the enemy of compliance. A four-layer classification scheme works because it maps to actual workflows:

Public: Information intended for the open internet, like course catalogs or marketing copy. The risk here isn't secrecy. It's integrity. You don't want an AI hallucinating fake application deadlines.

Internal: Day-to-day business records like committee memos, drafts, operational emails. The standard friction of running the university.

Confidential: Student records (FERPA), HR files, grades. Leaks damage real people and the institution's reputation.

Regulated: The hard red line. PII, HIPAA health data, export-controlled research, or data with strict contractual breach notification clauses.

You can tell a professor: If the data is Public or Internal, you can use these approved tools. If it's Confidential or Regulated, you cannot put it into a public AI model. You build the logic into the process, not just the policy.

A department chair wants to use AI to help write performance reviews for staff. Technically, that's HR data, which is confidential. But they argue it's just helping them articulate feedback better.

Or a researcher wants to use AI to code interview transcripts (anonymized, yes, but still dealing with sensitive material).

You're not the sheriff of the university. You're the building inspector. In higher education's decentralized environment, you can't walk into a dean's office and dictate how they run their research.

Your opinion does not solve the gray area. You need to work toward an institutional consensus.

The decision framework relies on two questions. First, do we have an approved walled-garden AI tool for this? If yes, then writing performance reviews or analyzing interview transcripts is fine. We want people to use AI with confidential business data because that's often where the operational value lies. We just need them to do it inside the house.

Second, if they want to use a non-standard tool, how high is the friction to get approval? If you make the review process painful, they will just bypass you. You need a low-friction fast lane that evaluates the risk of that specific tool. Your goal isn't to block them. It's to show them a safe path to achieve their goals.

A mid-sized campus might be looking at $500K to $2M for enterprise AI licenses across the institution. That's real money competing with deferred maintenance, faculty salaries, and student support. If you walk into a CFO's office and try to sell a million-dollar license based on hypothetical loss avoidance, you already lost. Fear does not unlock budgets in higher education. Mission does.

You frame this investment like you frame deferred maintenance for a physical building. You wouldn't ask a chemistry professor to run a lab without fume hoods just to save money on ventilation. That isn't a cost saving. It's an operational failure. Enterprise AI is the new digital ventilation. It's core infrastructure.

The argument is that we need to give our faculty and staff the tools to build, research, and operate efficiently without hitting the walls of data privacy restrictions. We are investing in a capability that speeds up data analysis, optimizes administrative workflows, and opens new research doors. You aren't paying for safety. You are paying for the capacity to innovate without the friction of constant legal review.

Even if you win that budget battle and get the enterprise license, you still have the adoption problem. Faculty are already comfortable with free AI tools. They are fast and familiar, while your enterprise solution may have a clunkier interface and more steps. Universities should use shared governance to implement change. You cannot make this decision from the IT office. You should make it with the Faculty Senate.

Bring the problem to them: We have a risk that threatens our ability to hold federal grants. How do we solve it? When the governance body helps design the guardrails, it owns the outcome. The disruption from AI is moving faster and hitting harder than the rise of the Internet ever did. It touches teaching, administration, and research simultaneously. Because the impact is so wide, the consensus must be equally broad.

If the faculty helps build the wall, they are far less likely to climb over it.

You need to focus on three structural pillars.

First, map the actual usage. You cannot govern what you cannot see. Don't rely on network scanners to find AI usage. Go talk to your department heads. Ask them what tools they're using. Understand why they chose those tools. Help solve their problems instead of auditing their choices. You need to understand context before you can try governing the tools they use.

Second, build a dedicated governance function. Do not shove this into an existing IT steering committee. The impact of AI is too broad and touches too many areas of the institution. You need a focused working group, and it must have strong faculty and researcher representation. If the academic side isn't at the table, they will just build around you.

Third, get ink on paper. You don't need to invent this from scratch. Use the NIST AI Risk Management Framework as your starter kit. But you must draft and socialize proposed rules now. You need a rough blueprint to get feedback because people cannot critique a blank page.

In higher education, urgent often dies in committee. We have all seen the Cloud First or Mobile Ready initiatives that took a decade to land. But this wave is different. It's an existential operational threat. Innovation rarely forces a university to move fast, but risk to revenue can move mountains overnight. External factors are compounding, including issues like copyright law, insurability, and grant eligibility.

Federal agencies are no longer asking politely. If your researchers cannot certify how they are using AI, or if they inadvertently use a tool that leaks data or fabricates results, you don't just lose a lawsuit. You risk losing your federal funding eligibility. That is the lifeblood of a research university.

The $1.5 billion Anthropic settlement changed the math for insurers. When your cyber insurance renewal comes up next year, don't be surprised if your underwriters ask pointed questions about AI. If your answer is we're waiting for the dust to settle, they might not just raise your premiums. They might decline to cover you.

The risk is already inside the house. The only way to mitigate it is to build a safe path forward: invest in the walled garden or the data classification policy so your users know their options.

Most higher education AI conversation have focused on three concerns: confidentiality, academic integrity, and data sovereignty. We worried about students cheating, researchers leaking data, and server locations.

Copyright enforcement changes the equation. It's messier than confidentiality or integrity because it's not about keeping secrets or being honest. It's about ownership and liability. A tool purchased for efficiency can become a legal liability.

The ground is shifting. You can see that as an earthquake threatening your foundation, or as an opportunity to build a better architecture. Technology and security leaders can't just enforce policy for AI use. We need to guide institutions through this transition by creating a structure that enables safe experimentation.

Forget the short-term mandates that will be obsolete by summer. Put your energy into governance that lasts. Build relationships, committees, and trust structures that will still matter in 2035.

This isn't just a compliance project. It's building the support structure for how higher education will function with AI in the coming decades.

Start Your 30‑Day Diagnostic - $399

Build a data‑informed, board‑ready cybersecurity plan in 30 days.

Includes expert guidance, 30‑day access to the Cyber Heat Map® platform, and weekly group strategy sessions.

No long‑term commitment. Just results.

Secure your seat today.