Chris Schreiber

Colleges and universities don't operate like a corporation, so why would you apply corporate AI security frameworks to your campus?

As artificial intelligence transforms higher education, the unique characteristics of academic environments demand specialized approaches to cybersecurity that corporate playbooks cannot address.

The convergence of AI advancements with the distinctive culture of higher education creates security challenges that require tailored solutions. Understanding these differences is the first step toward developing effective AI governance for your institution.

Academic freedom stands as a fundamental principle in higher education that affects how you approach AI cybersecurity on campus. Unlike corporate environments where top-down security mandates are standard practice, your institution celebrates and protects the freedom to experiment, research, and explore new technologies.

"Limiting the ability to experiment and try new things really goes against the grain of what higher education stands for," explains Chris Schreiber, founder of CampusCISO and former chief information security officer at universities such as the University of Chicago and the University of Arizona.

This creates an immediate tension: how do you implement necessary security guardrails without constraining the intellectual exploration that defines your academic mission? The answer lies not in restrictive policies, but in enabling secure innovation through thoughtful infrastructure.

Forward-thinking institutions are addressing this challenge by deploying their own AI environments with built-in protections. Rather than telling faculty and researchers what they can’t do, these schools are establishing supported platforms that preserve academic freedom while implementing security guardrails.

“Some of the more forward-looking institutions have embraced AI early, often by standing up their own AI environment for use by faculty and students,” notes Schreiber. “Instead of telling people ‘no, don’t use AI,’ they’ve tried to get ahead of it by enabling a solution that has guardrails in place.”

This approach not only upholds the institution's core values, but also addresses the complexities arising from decentralized IT structures.

Most large institutions operate more like a federation of semi-autonomous departments than a unified organization. This decentralized structure—another defining characteristic of higher education—creates significant security challenges for AI governance.

With individual colleges, research labs, and academic units managing their own IT resources, central oversight becomes difficult. For example, a business school might deploy sophisticated AI analytics tools while humanities departments operate on entirely different technical frameworks.

Schreiber points out this reality: “Higher education is decentralized, and individual researchers and professors might get independent funding. So they’re not always using IT services provided by a central IT organization. Often, faculty have separate IT environments that are financed as part of their research.”

This decentralization means campus cybersecurity leaders frequently lack authority to mandate AI usage policies. Instead, successful approaches focus on education, collaboration, and providing secure options that departments want to use.

“When you have decentralized IT environments, it’s more like working across a federation of IT groups,” Schreiber explains. “The central IT organization and the chief information security officer often lack direct authority to mandate what to do and how to do it. So they focus on education, teaching people what the risks are, encouraging people to approach things in a risk-aware manner.”

Most institutions are built upon principles of knowledge creation and sharing, values that conflict with traditional cybersecurity approaches focused on data containment. This is yet another dimension where corporate security frameworks fall short in higher education.

“Higher education fosters a very open and collaborative environment,” says Schreiber. “Schools were created to create new knowledge, to share that knowledge with others, and going back even over centuries, that’s really what the centers of learning have been about.”

This collaborative culture makes restricting data flow challenging. Yet introducing AI tools—especially those that incorporate user data into their training models—creates even more risk around potential sharing of sensitive information.

The solution is not to restrict collaboration, but to implement protections based on data classification. Schreiber recommends: “Institutions should think about data classification and data governance. What types of data does the institution manage and what are the risks associated with that data? That needs to be the foundational element of how they approach AI governance.”

Several leading universities are developing innovative models that balance security needs with academic values. “They’ve deployed the AI solutions with guardrails so that data that is submitted through those tools can stay isolated and doesn’t become part of the global training model for that AI vendor’s other customers,” explains Schreiber.

These protected environments give your researchers and faculty freedom to experiment with AI while the institution keeps control of its data. That control matters most for research involving healthcare information, personally identifiable information, or other sensitive data.

Some universities that have developed their own AI solutions designed to ensure data privacy and security include:

1. University of Chicago’s Phoenix AI

The University of Chicago has developed Phoenix AI, a generative AI platform tailored to meet the specific needs of its academic community. This in-house solution emphasizes data privacy and security, allowing researchers and staff to utilize AI tools without compromising sensitive information. (https://genai.uchicago.edu/)

2. University of Michigan’s U-M GPT

The University of Michigan launched U-M GPT, an internally developed generative AI tool. Hosted within the university’s secure infrastructure, U-M GPT assists faculty, researchers, and students in various tasks, ensuring that data privacy and intellectual property are protected. (https://genai.umich.edu/)

3. University of California, Irvine’s ZotGPT

UC Irvine introduced ZotGPT, an AI chatbot designed to support faculty and staff with tasks such as creating class syllabi and writing code. Built using Microsoft’s Azure platform, ZotGPT addresses privacy and intellectual property concerns by keeping data within the university’s secure environment. (https://zotgpt.uci.edu/)

4. Washington University’s WashU GPT

Washington University developed WashU GPT, a generative AI platform that offers a secure alternative to commercial AI tools. By building its own model, the university ensures that data privacy and intellectual property are safeguarded, providing a trusted resource for its academic community. (https://genai.wustl.edu/tools/chatgpt/)

5. National Louis University’s Retain

National Louis University in Chicago created Retain, an AI-assisted system designed to help identify at-risk students and equip campus community members with data. This in-house software pulls relevant data from campus systems to create a comprehensive view of student information, enabling real-time, data-based decision-making while ensuring data privacy. (https://www.insidehighered.com/news/student-success/academic-life/2024/12/16/national-louis-u-empowers-staff-data-ai-tools)

Higher education institutions face distinct AI-related security vulnerabilities that rarely exist in corporate environments. The combination of open networks, minimal application controls, and distributed IT management creates an expanded attack surface that requires more thoughtful protection strategies.

Perhaps the most significant vulnerability is the prevalence of shadow IT. "Shadow IT" refers to the use of information technology systems, devices, software, applications, and services without explicit IT department approval. In higher education, decentralized structures magnify this problem.

Schreiber points out that higher education institutions are especially vulnerable to shadow IT because their IT systems are so distributed. “In a corporate setting, you often have much tighter control over how your data can come in and out of the environment. Not many colleges and universities have a similar level of control over their entire network.”

This allows users to upload sensitive institutional data to AI systems without the security team’s knowledge. “It’s very easy for somebody to buy a $30 a month AI tool and upload sensitive research data into that tool, and nobody on the institution’s information security team would be any the wiser,” warns Schreiber.

While emerging AI threats like prompt injection and model poisoning will affect all sectors, higher education’s distributed nature makes defense coordination particularly challenging. Your security approach must account for this reality by focusing on visibility, education, and policy frameworks appropriate for academic environments.

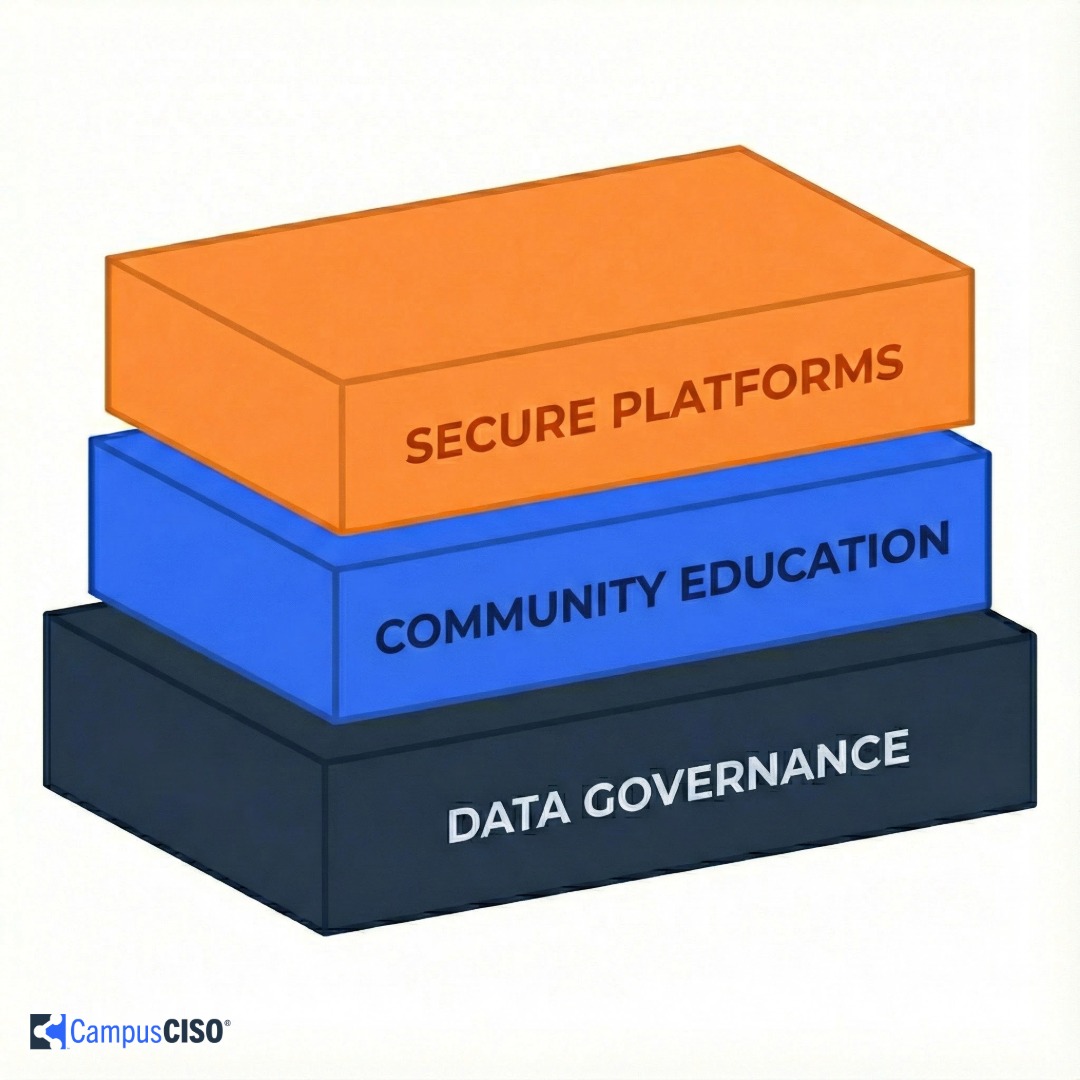

Developing effective AI governance for your institution requires a multi-layered approach that acknowledges academic realities while protecting sensitive data. A successful framework begins with establishing robust data classification and governance policies.

“Step one is having that data classification and data governance policy in place,” Schreiber advises. “Step two is to educate all of your users that may be interacting with sensitive data about your data classification policies and what the requirements are to protect that data.”

The third component involves providing clear guidance about which AI tools are appropriate for different data classifications. This approach respects academic freedom while establishing necessary boundaries for truly sensitive information.

“There’s nothing wrong with letting people play with AI, even if it’s just something that they access for free on their own. If they’re not putting sensitive data into it and they’re not exposing anything that creates a risk to the institution, you really don’t want to infringe on people’s ability to explore and learn more about AI.”
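The guidance described above, matching AI tools to data classifications, can be written down as a simple lookup. The following is a minimal sketch rather than a recommended policy: the four tier names, the tool names, and the rule that unlisted tools are treated as approved for public data only are all illustrative assumptions, and each institution would substitute its own classification scheme and tool catalog.

```python
from enum import IntEnum


class DataClass(IntEnum):
    """Hypothetical sensitivity tiers; a real policy defines its own."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical catalog: the most sensitive tier each tool is approved for.
APPROVED_CEILING = {
    "free-public-chatbot": DataClass.PUBLIC,
    "vendor-licensed-assistant": DataClass.INTERNAL,
    "campus-hosted-ai": DataClass.RESTRICTED,
}


def is_use_permitted(tool: str, data: DataClass) -> bool:
    """Return True if the tool is approved for data at this tier.

    Assumption: tools missing from the catalog may still be used with
    public data, so personal exploration is not blocked.
    """
    ceiling = APPROVED_CEILING.get(tool, DataClass.PUBLIC)
    return data <= ceiling


def tools_for(data: DataClass) -> list[str]:
    """List the cataloged tools approved for data at this tier."""
    return sorted(t for t, c in APPROVED_CEILING.items() if data <= c)
```

Under these assumptions, `tools_for(DataClass.RESTRICTED)` returns only the campus-hosted option, while a self-procured tool that is not in the catalog is still permitted for public data. Defaulting unknown tools to the public tier is one way to reflect the point in the quote above: experimentation is left alone until sensitive data is involved.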

Unlike corporate environments where technology controls often take precedence, a higher education AI security strategy should emphasize education and awareness. This aligns with academic culture and reflects the realities of decentralized IT environments.

“Some institutions have actually incorporated AI training into their user training, especially for staff who are involved in research or handling health care or other types of sensitive data,” Schreiber observes. While AI-specific security training is still emerging, incorporating guidance into existing security awareness programs represents an important starting point.

This educational approach should be collaborative rather than restrictive. “Institutions should be careful that they don’t become the ‘no’ organization that tells people that they can’t use AI,” cautions Schreiber. “Instead, institutions should train people how to use AI safely and who to come to if you have questions about how to use it safely.”

Institutions that position security teams as partners rather than obstacles see better compliance and develop stronger relationships with academic departments—a critical factor in environments where mandatory controls are difficult to implement.

For smaller institutions, identifying specific areas where AI may pose risks is crucial. Even without a large security team, smaller colleges and universities can implement effective AI security measures through strategic approaches that match their capabilities.

“Smaller institutions should identify specific areas where they know that AI may not be safe. So again, this goes back to that idea of understanding where you have sensitive data, who handles that sensitive data, and what training you provide to those individuals,” recommends Schreiber.

Leveraging existing partnerships with technology providers represents another practical strategy. “Many institutions already work with large companies that are embedding AI into their solutions, whether it’s somebody like Adobe or Microsoft or Google. They’re all developing AI solutions, and almost every institution is using one or more of those three companies,” Schreiber points out.

By using current software licenses and creating usage policies, institutions can securely implement AI capabilities without major additional investment. It is important to ensure these approved tools are accessible and known to users.

Regardless of institution size, Schreiber stresses training data handlers on the risks of self-procured AI tools. “The key is making sure that solutions are available, because if you don’t provide something proactively, your users are going to go out and find something.”

As AI technologies evolve rapidly, institutions need a cybersecurity approach that remains relevant through technological change. This requires focusing on foundational principles rather than specific technologies.

Security teams need visibility into AI use across campus, but monitoring should not create a climate that discourages open use and collaboration. “One of the things that cybersecurity teams need to be thinking about is how do they identify what AI solutions are in use, by who, and what kinds of data are going into those solutions,” suggests Schreiber.

This inventory approach works best when positioned as collaborative rather than disciplinary. “Approach it more as a census exercise,” Schreiber advises. “The big thing is to make sure that it does not become punitive, so that people feel comfortable sharing this information with the security team.”
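As one way to picture the census, the sketch below records which AI tools are in use, by which unit, and with what kind of data, then summarizes the responses. It is an illustration under stated assumptions, not a prescribed process: the `CensusEntry` fields, the classification labels, and the choice to record the reporting unit rather than an individual (in keeping with the non-punitive framing) are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CensusEntry:
    """One self-reported use of an AI tool (hypothetical fields)."""
    unit: str                 # department, lab, or office reporting the use
    tool: str                 # name of the AI tool
    data_classification: str  # e.g. "public", "internal", "sensitive"


def summarize(entries):
    """Group responses by tool, collecting reporting units and data types."""
    summary = defaultdict(lambda: {"units": set(), "data": set()})
    for entry in entries:
        summary[entry.tool]["units"].add(entry.unit)
        summary[entry.tool]["data"].add(entry.data_classification)
    return dict(summary)


def needs_follow_up(summary, sensitive_labels=frozenset({"sensitive"})):
    """Return tools where at least one unit reported sensitive data.

    These are candidates for a supportive conversation about safer
    options, not a list of violations.
    """
    return sorted(
        tool for tool, info in summary.items()
        if info["data"] & sensitive_labels
    )
```

The summary answers the three questions in the quote above (which tools, who, what data), and `needs_follow_up` narrows the list to the tools where the security team's help is most likely to matter.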

When evaluating or building institutional AI platforms, security teams should focus on data isolation and emerging threat monitoring. Schreiber recommends starting with two questions: “Where does the data live and what guardrails can you put around it? Are you able to set up a solution where data only goes into your environment and never becomes part of a broader training model?”

Equally important is staying current with AI-specific vulnerabilities as they emerge. “Just like when web applications started popping up 20 years ago, we had to spend a lot of time educating developers and IT administrators about things like cross-site scripting and SQL injection attacks. We’re seeing the same type of evolution with AI solutions,” Schreiber notes.

Institutions’ approach to AI cybersecurity must be as unique as their academic environment. By building frameworks that acknowledge the realities of academic freedom, decentralized IT structures, and collaborative cultures, IT leaders can protect sensitive data while enabling the innovation that defines higher education.

The most successful strategies will balance security needs with academic values, focusing on data classification, community education, and providing secure options that respect institutional culture. As AI continues to transform education, striking a balance between security and the open exploration that drives academic progress becomes increasingly critical.

By proactively developing and implementing tailored AI cybersecurity strategies, institutions can safeguard their communities and foster an environment where innovation thrives.
